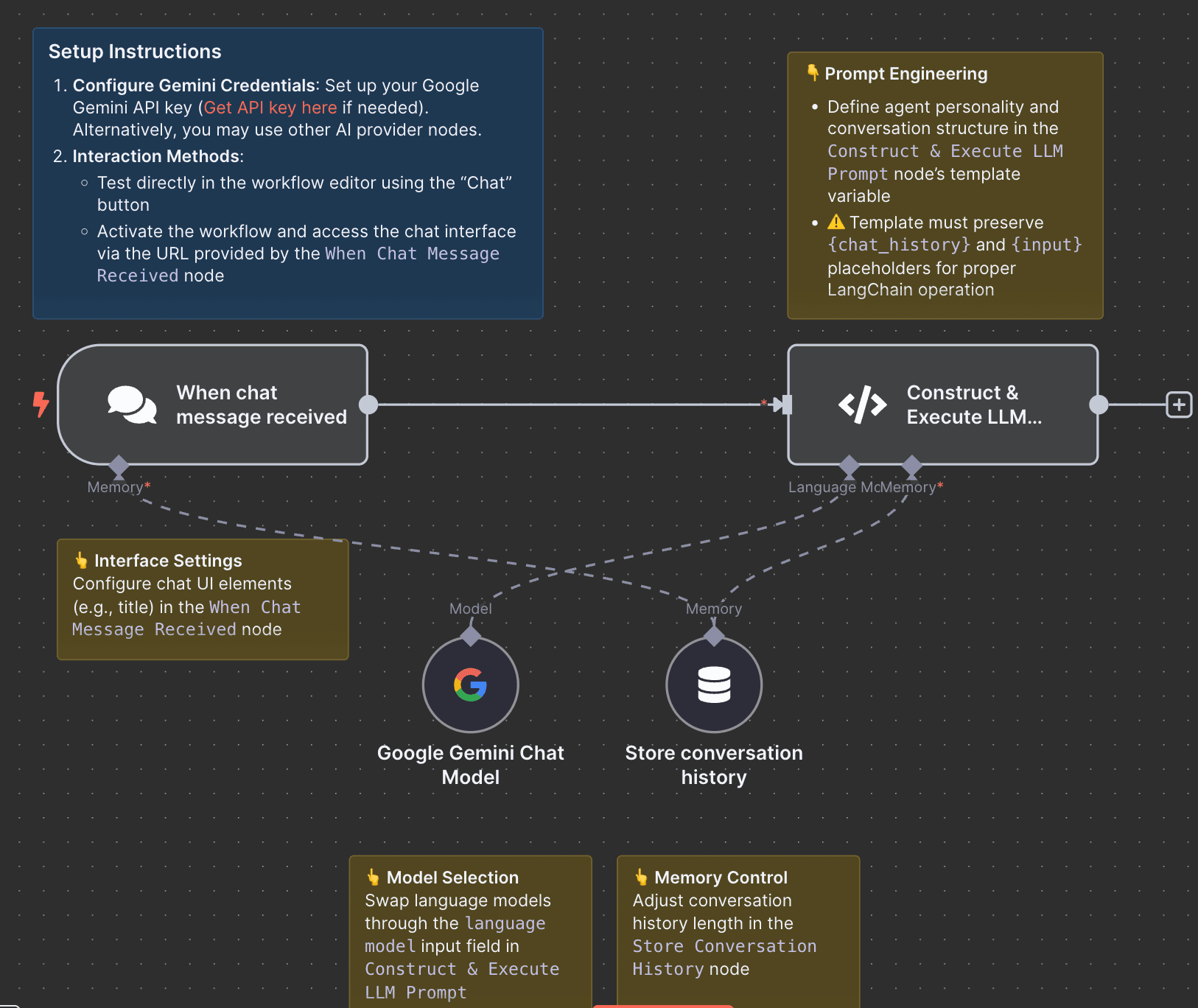

This workflow uses the LangChain Code node to implement a fully customizable conversational agent. It is ideal for users who need granular control over their agent's prompts and want to avoid the token overhead of reserved tool-calling functionality (compared to n8n's built-in Conversation Agent).

Key nodes and settings:

- When Chat Message Received node
- Construct & Execute LLM Prompt node's template variable: keep the {chat_history} and {input} placeholders for proper LangChain operation
- language model input field in Construct & Execute LLM Prompt
- Store Conversation History node

⚠️ This workflow uses the LangChain Code node, which only works on self-hosted n8n.

(Refer to the LangChain Code node docs.)
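
To illustrate why the {chat_history} and {input} placeholders matter, here is a minimal TypeScript sketch of how a prompt template with those two placeholders might be filled in before it is sent to the model. This is not the code inside the workflow and not LangChain's implementation: the template wording, the `ChatTurn` type, and the `formatHistory` and `renderPrompt` helpers are all hypothetical names introduced for illustration. Only the two placeholder names come from the workflow description.

```typescript
// Hypothetical shape of one stored conversation turn (an assumption for this sketch).
type ChatTurn = { role: "human" | "ai"; text: string };

// Example template. The wording is made up; only the {chat_history} and
// {input} placeholders are taken from the workflow description.
const template: string = [
  "You are a helpful assistant.",
  "",
  "Conversation so far:",
  "{chat_history}",
  "",
  "Human: {input}",
  "AI:",
].join("\n");

// Flatten stored turns into plain text, one "Speaker: text" line per turn.
function formatHistory(turns: ChatTurn[]): string {
  return turns
    .map((t) => `${t.role === "human" ? "Human" : "AI"}: ${t.text}`)
    .join("\n");
}

// Substitute {name} placeholders with values. Throws if the template has
// lost one of the two placeholders this sketch treats as required.
function renderPrompt(tpl: string, values: Record<string, string>): string {
  for (const key of ["chat_history", "input"]) {
    if (!tpl.includes(`{${key}}`)) {
      throw new Error(`Template is missing the {${key}} placeholder`);
    }
  }
  return tpl.replace(/\{(\w+)\}/g, (match: string, key: string): string => {
    const value = Object.prototype.hasOwnProperty.call(values, key)
      ? values[key]
      : undefined;
    // Unknown placeholders are left untouched rather than blanked out.
    return value ?? match;
  });
}

const history: ChatTurn[] = [
  { role: "human", text: "Hi" },
  { role: "ai", text: "Hello! How can I help?" },
];

const prompt: string = renderPrompt(template, {
  chat_history: formatHistory(history),
  input: "What is n8n?",
});

console.log(prompt);
```

If either placeholder is removed while editing the template, this sketch throws instead of silently sending a prompt that lacks the conversation history or the user's message. The real node may behave differently, so treat the check as a design suggestion rather than a description of the workflow.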